Technology Services is building a culture of greater awareness and understanding surrounding data privacy at the University.

Since 2020, Privacy at Illinois has hosted the Privacy Everywhere conference. This year’s conference offered sessions on privacy engineering, online advertising and privacy, best practices shared among Big Ten universities, and other topics.

Privacy thought leaders shared expertise and experience and fueled thought-provoking conversation. Here’s some of what they covered.

Your data on the auction block: what it means for you and national security.

You may have a general idea that your personal data is collected when you interact with a company online. You may even know that your data is frequently sold for marketing and research purposes, but you may not know the extent to which information about you is shared and re-shared, sold and resold. You may also not realize the breadth of data points available about you.

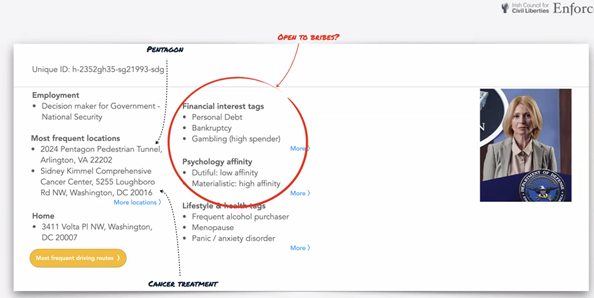

Privacy activist Dr. Johnny Ryan is a Senior Fellow at the Irish Council for Civil Liberties and Senior Fellow at the Open Markets Institute. He provided insight into the path your data can take when you use the world’s largest and most popular search engine to make a routine online purchase.

Ryan showed that some of the data points available in the marketplace through a process called Real Time Bidding have not only personal but also national security implications. The information he was able to see, which data brokers can easily obtain, includes whether you work for a government agency or for a company that builds systems or products for the government. Data is also available about your financial status, such as debts or child support owed. A bad actor with both of those data sets, or with other potentially embarrassing or compromising information, might have leverage to blackmail an individual in exchange for sensitive government information. Adding another layer of complexity to the already complicated Real Time Bidding process, many data brokers are individuals or companies based in China or Russia.

He is presently advocating with the European Union to limit or eliminate the availability of this type of data on the open market.

AI and large language models are here with privacy implications we should know about.

Jay Averitt, a Senior Privacy Product Manager at Microsoft, and Saima Fancy, Senior Privacy Specialist at Ontario Health, shared their perspectives about privacy implications in the new world of AI.

They touched on the speed with which Large Language Models (LLMs) have appeared on the scene. Averitt said that they have arrived in a whirlwind and that, given the amount of data going into these models, data privacy is a huge issue. Fancy posited that LLMs are being released prematurely. “There is a lot of hallucination in the output. In the health sector, people are putting personal information into it not realizing that the data will sit with the LLM and will be used for further LLM training. We have not had time to educate people because they are coming out rapid fire,” she added.

Averitt noted that social media poses an interesting set of issues and there is a tradeoff. “Maybe you don’t have to have absolute privacy because there are some good aspects to social networks. It can be about striking a balance. How much privacy do you want to give up for the product?” he said.

Fancy echoed those sentiments and added that everyone should recognize that privacy is a fundamental human right you need to protect. “Even if there is some info available about you already, you don’t need to add more. Be your own advocate. Balance your interests with the interests of your family. We don’t have to open our entire book,” she explained.

Averitt and Fancy also discussed how we must come up with solutions to protect data being shared with LLMs. “Maybe we ensure we are not storing the prompts or training the models? If that model needs that data, we should use anonymized data to train it,” Averitt suggested.

Fancy pointed to the EU’s General Data Protection Regulation (GDPR) as an example for privacy protection. “GDPR allows individuals to reject being subjected to automatic decision making. North America needs something like GDPR,” she explained.

Averitt agreed and mentioned that we are playing catch-up in the U.S. “I look at whether we are collecting data the right way, storing it the right way. It is about poor data collection in the AI space. It would be great if the AI boom would help get a federal privacy statute in place in the U.S.,” he said.

The more we know about what happens with our data, the more power we can have over it and its use. Here are takeaways you can use to actively engage with data privacy.

- Personal – Your data is up for auction over and over.

Familiarize yourself with how and when your personal data is used by companies and organizations you interact with online. Understand the tradeoffs involved in these interactions. By sharing certain data, you may be giving up some privacy for the sake of convenience.

- Professional – AI is here and it is changing at a pace that U.S. privacy regulations have not matched.

Baking privacy into AI programs upfront will improve both the AI programs and individuals’ privacy. Until then, either avoid adding your personal data to AI systems or make informed decisions about the data you do share. Ensure you have the authority to use AI for sensitive or high-risk data, especially personally identifiable and health information that is not your own, because these systems can use the data they receive for training. More dialogue is needed in higher education about best practices with AI and how it can help individuals reach their learning goals while maintaining privacy principles.

- Privacy professionals and researchers are your advocates for better transparency and trust.

Privacy at Illinois aims to make data privacy top of mind. As software and AI investments come up for purchase and review, the privacy team encourages vendors and developers to take an approach in which privacy is baked into the product and process.

Privacy engineering envisions privacy as a competitive and strategic advantage to drive true innovation in a digital and data-driven world. Privacy engineering incorporates technical design and architectural privacy into software development, data projects, and technology. It vastly increases protection of private and personal information, often by not collecting personal details or by strictly limiting how sensitive or personal information is collected, processed, stored, and used.

Thinking strategically about data management can make data available that previously might not have been, but in a principled way. Learn more about privacy policies and practices and how Illinois privacy professionals can help with your work at Illinois.